Introduction: A New Era for Banking Technology

Banks today are at a crossroads. After decades of relying on rigid legacy core systems designed in a pre-digital era, financial institutions face surging demands for real-time, personalized services and ever-changing regulations. Customers now expect instant digital experiences, fintech competitors are rewriting the rules, and regulators push frameworks like open banking to spur innovation. Traditional core banking platforms – built for batch processing-centric operations – are struggling to keep up. Many banks tried to extend these old systems by adding APIs and middleware, but this only provided temporary relief and created complexity without addressing the root limitations. In fact, 55% of banks cite legacy systems as their top barrier to digital transformation, with maintenance consuming up to 70% of IT budgets. It’s clear that modernization isn’t optional – it’s become existential for incumbents.

Yet transforming core infrastructure is no simple task. Legacy environments are often described as “spaghetti”: decades of siloed products, bolted-on channels, and point solutions entangled in complex integrations. This patchwork yields poor customer experiences (fragmented across channels), high servicing costs, and slow time-to-market. Ripping and replacing a core system in one go is risky and can jeopardize business continuity, so banks have historically been cautious. Indeed, 69% of banks cite fear as a major barrier to deploying next-gen solutions. The result is that many banks still hesitate – an industry survey found 79% of banks are only in the foundational stages of their hybrid cloud journey (early in cloud adoption), even as nearly all acknowledge the need to move forward.

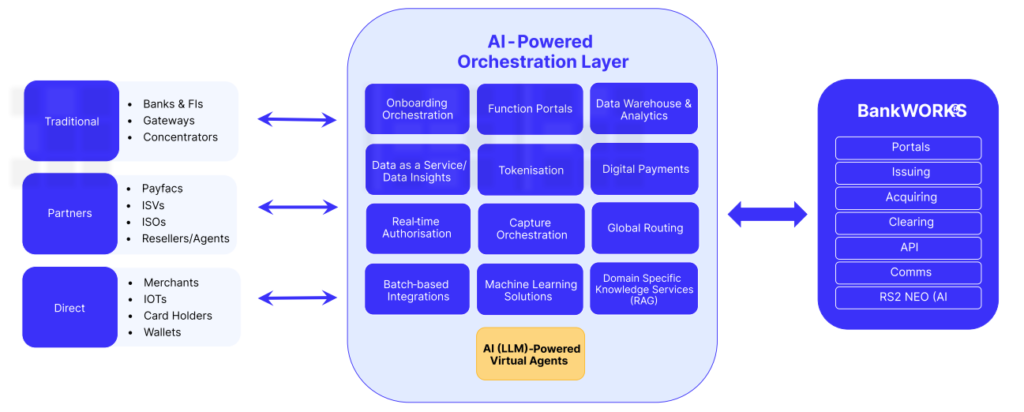

How can banks break this paralysis and accelerate innovation without “betting the bank” on a risky overhaul? The answer emerging across the industry is a progressive modernization strategy enabled by an AI-powered orchestration layer. Instead of continuing to sink resources into outdated cores – “keeping the foot on the gas toward a dead-end”, as one expert put it – banks are shifting to a fresh approach. This means moving from channel-centric, monolithic setups to customer-centric, modular architectures. In practical terms, it involves decomposing legacy core functions into microservices and layering a modern orchestration platform on top. Think of it as inserting a “universal plug” that connects all systems and channels, allowing incremental upgrades with minimal disruption. By introducing an orchestration layer (sometimes called a digital integration hub or middleware platform), banks can link old and new systems into a unified workflow and gradually replace components step by step. This layer acts as a central intelligence hub that coordinates data and processes across the bank’s ecosystem. Crucially, it can leverage advanced technologies – like real-time analytics, machine learning, and even generative AI – to optimize every transaction and decision across the connected systems.

In this comprehensive deep-dive, we’ll explore how AI-enabled orchestration is transforming banking architecture. We begin by examining the challenges of legacy banking systems and how orchestration platforms address them. Then we look at how banks are applying machine learning and generative AI for real-time processing and smarter decisioning. We compare the modernization strategies of major global banks versus fintech-born firms, and analyze the competitive landscape of orchestration technology providers (from new cloud-native core platforms to incumbents offering modern layers). Real-world case studies will illustrate successful deployments of orchestration and the tangible benefits achieved – from fraud reduction and compliance automation to improved customer experience and scalability. We’ll also discuss the technical foundations (microservices, APIs, containerization) that make these orchestration layers possible, and outline a strategic roadmap for banks to progressively modernize their IT stack. Finally, we’ll explore future trends – such as embedded finance, open banking, autonomous finance, and hyper-personalization – and how an AI-driven orchestration approach positions banks to thrive in this future.

This guide is designed for product managers, technology leaders, banking executives, and strategists looking to navigate the journey of banking modernization. By blending deep technical insight with business context, we aim to provide a clear vision for orchestrating the bank of the future – one that is agile, intelligent, and customer-centric. Let’s start by understanding why legacy systems became such a roadblock, and how orchestration can pave the way forward.

From Legacy Spaghetti to Agile Orchestration: Challenges and Solutions

The Legacy Challenge: Traditional banks built their IT systems over decades, stacking new features and channels onto aging core ledgers and mainframes. The result is often a “black box” monolithic core surrounded by many satellite systems for online banking, mobile, payments, statements, and more. Each addition was integrated with custom code and middleware, leading to tangled dependencies – the infamous legacy “spaghetti.” While these systems are reliable workhorses, they are inflexible, costly to maintain, and increasingly incapable of meeting modern demands. For example, legacy cores typically process transactions in batches (end-of-day updates) and were not designed for the 24/7 real-time processing customers now expect. Their data is siloed by product, limiting the bank’s ability to get a 360° customer view or roll out cross-channel experiences. Changes to legacy systems are notoriously slow and risky – even minor updates require careful coordination and testing, because a small glitch in the monolith can bring down an entire system. Moreover, the cadre of COBOL and mainframe specialists needed to support these systems is shrinking as experts retire. In short, legacy cores have become “dead ends” for innovation – pouring more investment into them is like accelerating toward a brick wall.

The business impacts of this legacy tech debt are significant. Banks with patchwork systems find it expensive to adapt to new regulations or market changes, because even compliance updates require touching many systems. Customer experience suffers when digital channels aren’t seamlessly integrated – for instance, a customer might have to navigate separate systems for online and mobile banking that don’t share data in real time, causing frustration. Product innovation is stifled; launching a new service or integrating a fintech partner can take months of development on legacy infrastructure. Meanwhile, nimble neobanks and fintechs built on modern tech are able to roll out features rapidly and capture market share. As one banking executive observed, “It’s a race against obsolescence” for incumbents stuck on aging cores. Indeed, surveys indicate banks recognize this risk – 93% of banking tech decision-makers say their future success hinges on choosing the right core platform, and only 2% believe they can afford to stick indefinitely with their legacy systems.

Why Orchestration Layers? Faced with these challenges, banks have debated whether to build a new core in-house, buy a package from a vendor, or partner with fintechs. However, each option has trade-offs, and a rip-and-replace core project can be akin to performing heart surgery on the bank – high risk, with potential downtime. This is where the concept of progressive modernization with an orchestration layer comes in. Instead of immediately replacing the legacy core, the bank introduces a new orchestration platform above the core. This smart layer can connect to all existing systems (core banking, card processors, payment gateways, channels, etc.) via APIs or messaging and acts as a central coordinator for processes and data. Think of it as an air traffic controller ensuring all the bank’s “planes” (systems) operate in sync, but also as a hub that can inject new intelligent services into the flow.

By decoupling customer-facing services from the legacy core, an orchestration layer lets the bank innovate rapidly at the edges without disturbing the stable core processing. The bank can gradually migrate functionality into the orchestration layer or surrounding microservices. For example, instead of trying to modify a legacy core to support a new real-time payment scheme or mobile wallet, the bank can implement that in the orchestration layer which interfaces with the core’s existing capabilities. This “hollowing out the core” over time relieves load from the legacy system and moves new workloads to modern infrastructure. Importantly, an orchestration layer enables data from disparate systems to be unified, giving the bank a centralized view of customers, transactions, and risk metrics for the first time. With this, banks can glean insights that were previously buried and then feed those insights back into front-end experiences or operational decisions.

Modern orchestration layers also come with built-in tools for monitoring, security, and compliance. They can standardize messaging formats across systems (for instance, translating various legacy formats into a common schema like ISO 20022) and consolidate logging and audit trails. A practical example: a global bank can use an orchestration layer to consolidate payments messaging onto the ISO 20022 standard ahead of regulatory deadlines, creating a single source of truth for all transactions and simplifying compliance reporting. At the same time, the orchestration layer can enforce security and data privacy rules centrally, which is vital when exposing APIs or integrating external partners. Banks are understandably worried about data security when introducing AI and cloud services – many initially even banned employee use of external AI tools like ChatGPT due to privacy risks. An orchestration layer can alleviate this by containing AI modules within the bank’s controlled environment (for example, deploying machine learning models in a private cloud so that sensitive data never leaves the bank’s perimeter). In summary, the orchestration approach lets banks “plug in” new capabilities while ring-fencing core stability and security.
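To make the format-translation idea concrete, here is a minimal Python sketch that normalizes two legacy payment formats into one common record. Everything in it is an illustrative assumption: the `CanonicalPayment` fields, the fixed-width layout, and the CSV column order are hypothetical, and the schema is only loosely inspired by the kind of data a standard such as ISO 20022 carries rather than an implementation of it.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class CanonicalPayment:
    """Hypothetical common schema used inside the orchestration layer."""
    debtor_account: str
    creditor_account: str
    amount: Decimal
    currency: str


def from_fixed_width(record: str) -> CanonicalPayment:
    """Parse an assumed fixed-width layout: 10-char debtor, 10-char creditor,
    12-digit amount in minor units, 3-char currency."""
    return CanonicalPayment(
        debtor_account=record[0:10].strip(),
        creditor_account=record[10:20].strip(),
        amount=Decimal(record[20:32]) / 100,  # minor units to major units
        currency=record[32:35],
    )


def from_legacy_csv(line: str) -> CanonicalPayment:
    """Parse an assumed CSV layout: currency,amount,creditor,debtor."""
    currency, amount, creditor, debtor = line.split(",")
    return CanonicalPayment(
        debtor_account=debtor.strip(),
        creditor_account=creditor.strip(),
        amount=Decimal(amount),
        currency=currency.strip(),
    )
```

Once every source system is mapped into the same record type, logging, audit, and compliance reporting can be written once against that type instead of once per legacy format.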

Progressive Modernization in Action: The beauty of an orchestration strategy is that it’s incremental. Banks can start with high-priority areas that deliver quick wins. For instance, a bank might first use an orchestration layer to streamline customer onboarding across channels (wrapping legacy KYC systems with a new API and adding an AI document verification service). This could significantly improve customer experience quickly, without a core replacement. Next, the bank could tackle payments routing through the orchestration layer – introducing intelligent transaction routing to optimize costs and approvals (more on that later). Meanwhile, parts of the core that are costly or inflexible can be slowly replaced by modern components behind the scenes, with the orchestration layer mediating interactions between new and old during the transition. This phased approach controls risk: core processes continue to run in parallel during migration, so if something fails in the new system, the bank can fall back to the old process, avoiding downtime.
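The phased migration with fallback described above can be sketched in a few lines of Python. This is a simplified illustration, not a production pattern: `ProgressiveRouter`, `migrate`, and `handle` are hypothetical names, and handlers are assumed to be plain functions that take and return dictionaries.

```python
from typing import Callable, Dict

Handler = Callable[[dict], dict]


class ProgressiveRouter:
    """Routes each operation to a modern service when one is registered,
    and falls back to the legacy handler if the modern path raises."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._modern: Dict[str, Handler] = {}

    def migrate(self, operation: str, handler: Handler) -> None:
        """Mark an operation as migrated to a modern handler."""
        self._modern[operation] = handler

    def handle(self, operation: str, request: dict) -> dict:
        handler = self._modern.get(operation)
        if handler is not None:
            try:
                return handler(request)
            except Exception:
                # Modern path failed: fall through to the legacy process.
                pass
        return self._legacy(request)
```

Falling back this way is only safe when the modern handler has not already committed side effects (for example, a posted ledger entry), so a real deployment would pair it with idempotency keys or compensation logic.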

Industry leaders are advocating this approach. A “progressive modernization” strategy means not sinking more effort into patching old systems (“duct-taping” as it’s often called), but rather adopting a fresh architecture that allows step-by-step renewal. That might involve layering new microservices on top of the core to handle specific functions, one at a time. Over time, the legacy core’s role is minimized to a system-of-record, while the orchestration layer and microservices handle all customer engagement, business logic, and integration. This approach was summed up by a banking platform provider: “ditch the spaghetti and envision a single integration layer that allows incremental modernization without major disruption”. In essence, orchestration is the path to gradually achieve a streamlined, agile core – an evolution, not a revolution.

In the following sections, we will dive deeper into how exactly these orchestration layers leverage advanced technologies, how banks are executing such strategies, and what solutions are available in the market to enable them. First, let’s look at one of the game-changers in modern orchestration: the infusion of artificial intelligence and machine learning to create truly intelligent banking processes.

Real-Time Intelligence: GenAI and Machine Learning in Transaction Orchestration

One of the biggest advantages of introducing an orchestration layer is the opportunity to infuse AI and machine learning (ML) into banking operations in a coordinated, real-time manner. Legacy systems typically had very limited AI capabilities – perhaps a basic fraud rules engine here or a credit scorecard there – operating in silos. An AI-enabled orchestration platform, by contrast, can act as the “brain” of the bank, ingesting data from all connected systems and applying intelligence to every transaction as it flows through. This is transformative: it allows banks to make smart, instantaneous decisions on things like approvals, routing, risk, and customer interactions, rather than relying on static rules or after-the-fact batch processes.

The Rise of AI in Banking Operations

Banks are increasingly recognizing AI/ML as key to competitiveness. According to a recent industry study, 68% of banks plan to implement AI into their core systems within the next few years, whereas only ~32% have done so to date. The motivation is clear – AI can sift through vast datasets, detect patterns and anomalies, and even predict future behavior far better than traditional programming. In areas like fraud detection, credit underwriting, marketing, and customer service, machine learning models are outperforming legacy approaches and unlocking new possibilities.

Perhaps the hottest topic is Generative AI (GenAI) – models like GPT that can generate human-like text, answers, or even code. While early excitement has been about chatbot use cases, GenAI’s utility in banking goes beyond just conversing with customers. For example, generative AI can analyze unstructured data (like customer emails or transaction memos) and extract insights, or even simulate scenarios for risk analysis (e.g. generating synthetic fraud patterns to test systems). In fact, generative AI is seen as a transformative force in fintech: it can analyze behavioral and transactional data to enable hyper-personalized services tailored to each customer. It’s also being explored for fraud prevention, where GenAI can simulate attack tactics to help banks proactively shore up defenses. On the compliance side, large language models can help streamline Anti-Money Laundering checks by rapidly reviewing transaction histories and flagging suspicious narratives, thus automating complex KYC/AML processes that once took armies of analysts.

However, to fully leverage AI in real time, banks need an architecture that can inject these models into transaction flows without causing latency or chaos. This is where orchestration shines. Instead of AI modules being bolted onto one system at a time, the orchestration layer can centrally orchestrate AI-driven checks and actions across all processes. For instance, as a payment transaction passes through the orchestration hub, one service can call a fraud-scoring ML model, another can call a credit risk model (if it’s a loan transaction), and another could query a chatbot to generate a personalized message for the customer – all in parallel, within a few milliseconds. This coordinated approach ensures the AI interventions are consistent and based on the holistic data available in the hub (as the orchestration layer sees data from multiple systems).
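As a rough illustration of running independent checks in parallel under a latency budget, the sketch below uses Python's `asyncio`. The two scoring coroutines are stand-ins for calls to model-serving endpoints; their names, the hard-coded scores, and the timeout value are assumptions made for the example.

```python
import asyncio


async def fraud_score(txn: dict) -> float:
    await asyncio.sleep(0.01)  # stands in for a remote model call
    return 0.9 if txn["amount"] > 5000 else 0.1


async def credit_risk(txn: dict) -> float:
    await asyncio.sleep(0.01)  # stands in for a remote model call
    return 0.2


async def enrich(txn: dict, timeout_s: float = 1.0) -> dict:
    """Run independent checks concurrently and bound the total wait."""
    fraud, credit = await asyncio.wait_for(
        asyncio.gather(fraud_score(txn), credit_risk(txn)),
        timeout=timeout_s,
    )
    return {**txn, "fraud_score": fraud, "credit_risk": credit}
```

Because the checks run concurrently, total latency is roughly that of the slowest call rather than the sum of all calls. If the budget is exceeded, `asyncio.wait_for` raises a timeout error, and the orchestrator would then need a policy for proceeding without the missing score.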

Intelligent Transaction Routing and Optimization

A compelling use case of AI in orchestration is dynamic transaction routing. Traditionally, payment routing (deciding through which network or processor to send a payment) is rules-based and static. Banks might have default routes and backups if one network is down, but it’s not optimized in real time. With ML, an orchestrator learns from past transaction outcomes and network conditions to route each payment along the optimal path. For example, the AI can consider factors like: which processor or card network has the highest approval rate for this card type? Which route has the lowest fees or best FX rate for an international payment? If a certain issuer tends to decline transactions above a threshold, perhaps try an alternate route first. By leveraging machine learning models on historical transaction data, the orchestrator can make these decisions on the fly.
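A minimal sketch of this kind of route selection follows. It replaces the machine learning model with a smoothed historical approval rate, which is a deliberate simplification; the `RouteStats` fields, the `margin` parameter, and the expected-value formula are illustrative assumptions rather than an industry-standard method.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class RouteStats:
    approved: int     # historical approvals on this route
    attempted: int    # historical attempts on this route
    fee_rate: float   # fee as a fraction of the transaction amount


def expected_value(stats: RouteStats, amount: float, margin: float) -> float:
    """Smoothed approval rate times the net margin earned if approved."""
    approval = (stats.approved + 1) / (stats.attempted + 2)  # Laplace smoothing
    return approval * amount * (margin - stats.fee_rate)


def choose_route(routes: Dict[str, RouteStats], amount: float,
                 margin: float = 0.03) -> str:
    """Pick the route with the highest expected net value."""
    return max(routes, key=lambda name: expected_value(routes[name], amount, margin))
```

In a fuller implementation, the per-route approval estimate would come from a model conditioned on card type, issuer, amount, and current network conditions instead of a single historical average.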

The benefits are significant: higher success rates, lower costs, and faster processing. Intelligent payment orchestration can reduce transaction failures and declines, improving conversion rates for payments by proactively avoiding known points of failure. It can also cut fees – for instance, routing a transaction through a network with lower interchange or avoiding unnecessary currency conversions. One real-world example is in e-commerce payments: merchants often use payment orchestration services to increase the percentage of approved transactions by retrying failed authorizations via alternate paths (a concept known as smart retry or decline recovery). Banks can apply the same internally. An AI-enabled orchestration layer might detect that a card transaction is likely to be declined by the issuer due to fraud rules; before simply passing on the decline, it could automatically request additional authentication or seamlessly offer the customer an alternate payment method. This kind of smart decline recovery has been shown to significantly reduce false declines, thereby recapturing revenue that would otherwise be lost.
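The smart-retry idea can be sketched as follows. The set of retryable decline codes and the `send` callback signature are assumptions for illustration; real processors each define their own codes and their own rules on which declines may be retried.

```python
from typing import Callable, Iterable, List, Tuple

# Assumed decline codes that are worth retrying on another route.
SOFT_DECLINES = {"network_error", "issuer_unavailable", "do_not_honor"}


def authorize_with_retry(
    txn: dict,
    routes: Iterable[str],
    send: Callable[[str, dict], Tuple[bool, str]],
    max_attempts: int = 2,
) -> Tuple[bool, List[Tuple[str, str]]]:
    """Try routes in order; stop on approval, on a hard decline,
    or once max_attempts routes have been tried."""
    attempts: List[Tuple[str, str]] = []
    for route in list(routes)[:max_attempts]:
        approved, code = send(route, txn)
        attempts.append((route, code))
        if approved or code not in SOFT_DECLINES:
            return approved, attempts
    return False, attempts
```

Stopping immediately on a hard decline matters: retrying a transaction that was refused for a reason such as a reported stolen card would add cost and risk without any chance of approval.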

In terms of cost optimization, consider foreign payments: the AI can choose the routing that minimizes foreign exchange spreads or uses the bank’s own internal FX rates when favorable. Over millions of transactions, these optimizations add up to substantial savings and better pricing for customers.

AI-Driven Fraud Detection and Risk Scoring

Fraud and security is another domain greatly enhanced by AI in orchestration. Legacy fraud systems often rely on static rules (e.g. block a card after 3 failed PIN tries, flag transactions over $10k). Today’s fraudsters continually evolve tactics, making static rules insufficient. Machine learning models, on the other hand, excel at analyzing behavioral patterns and spotting anomalies that indicate fraud. An orchestration layer with AI can analyze every transaction in real time against learned patterns of fraud. For example, an AI model can generate a dynamic risk score for each transaction within milliseconds. If the score is high (indicating likely fraud), the orchestrator can automatically route the transaction through step-up authentication (like requiring an OTP or biometric verification) before allowing it. If the score is low, the transaction sails through, minimizing friction for legitimate users.
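The score-to-action mapping described above might look like the sketch below. The thresholds are placeholder values, and the extra "block" tier for very high scores is an assumption added for illustration; the text itself only describes step-up authentication for high scores and a frictionless path for low ones.

```python
def decide(risk_score: float, step_up_at: float = 0.5, block_at: float = 0.9) -> str:
    """Map a model risk score in [0, 1] to an orchestration action."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= block_at:
        return "block"      # assumed extra tier, not described in the text
    if risk_score >= step_up_at:
        return "step_up"    # e.g. require an OTP or biometric check
    return "allow"          # low risk: no added friction
```

Keeping the thresholds as parameters lets the bank tune the trade-off between fraud losses and customer friction without retraining the underlying model.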

This approach dramatically improves both security and customer experience: banks see lower fraud losses and chargebacks, while genuine customers are less often inconvenienced by false alarms. As the RS2 orchestration white paper noted, ML-based fraud engines “help prevent fraud in real time without disrupting genuine transactions, reducing friction and maximizing approvals.” A practical outcome is lower false positives – fewer cases where a good transaction is wrongly flagged. (Banks historically err on the side of caution, generating many false fraud alerts that annoy customers; AI can tighten the net.) Industry analyses have found AI-based systems can reduce false positive rates in AML and fraud detection by 20-50%, saving investigation costs and improving accuracy.

A case in point: Isbank, one of Turkey’s largest banks, undertook a fraud orchestration overhaul with an AI platform (FCase) and drastically reduced fraud incidents while future-proofing their operations. They combined modern and legacy fraud systems under one orchestration to get the best of both – harnessing new AI tools alongside existing rule-based systems. This kind of layered approach – legacy rules plus AI models governed by an orchestration layer – can yield a multi-tier defense. The system consolidates fraud and risk data from across all products (cards, online banking, mobile payments, etc.), enabling enterprise-level AI risk scoring. Without orchestration, each channel might only see its own data and miss the bigger picture (e.g. multiple accounts being attacked in a coordinated way).

Generative AI and Conversational Banking

Beyond behind-the-scenes optimization, AI in orchestration opens up new customer-facing capabilities. For instance, AI-powered virtual assistants and chatbots can be integrated into banking processes to handle customer inquiries, guide users through transactions, or even initiate processes automatically. Modern conversational AI, often powered by generative language models, can resolve many routine queries without human intervention – from helping a customer reset their password to answering questions about an account. Integrated through the orchestration layer, these chatbots can seamlessly fetch information from various systems (account balances, recent transactions, loan offers) and perform actions (like transferring funds or disputing a charge) on the customer’s behalf. An orchestration platform could route a customer’s request to an AI agent first, only escalating to a human rep if needed, thus reducing support costs and response times.

We are already seeing banks deploy such capabilities. For example, Bank of America’s “Erica” chatbot has served millions of customers with AI-driven financial assistance. Newer generative AI agents promise even more – they can use the bank’s knowledge base to answer complex questions, generate personalized financial advice, or help internal staff by summarizing reports and suggesting solutions (through techniques like retrieval augmented generation, RAG). In an orchestrated architecture, a generative AI hub might sit as one of the services that any channel can call. If a customer asks a tricky question via chat or voice, the orchestration layer invokes the GenAI service which parses the query and produces a helpful response by drawing on the bank’s data (securely). For example, “What were the highest expenses on my credit card this quarter and how can I save?” – the AI could analyze transaction data, identify top spending categories, and generate a tailored tip (all on the fly). The orchestration layer ensures the AI has access to the needed data and that its response can trigger any recommended actions (like setting up a budget alert if the customer agrees).
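For the credit card question above, the data step that would ground a generated answer can be sketched simply. This example covers only the aggregation the orchestration layer might run before handing context to a generative model; the transaction record shape (`category`, `amount` as a decimal string) is an assumption, and the generation step itself is omitted.

```python
from collections import defaultdict
from decimal import Decimal
from typing import Dict, List, Tuple


def top_categories(transactions: List[Dict[str, str]],
                   n: int = 3) -> List[Tuple[str, Decimal]]:
    """Sum spending per category and return the n largest totals."""
    totals: Dict[str, Decimal] = defaultdict(Decimal)
    for txn in transactions:
        totals[txn["category"]] += Decimal(txn["amount"])
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:n]
```

Computing the figures deterministically and passing them to the model as context, rather than asking the model to do the arithmetic, keeps the numbers in the customer-facing answer traceable to the bank's own data.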

Generative AI can also assist internally. Imagine an operations analyst using an AI assistant that’s plugged into the orchestration layer: they could ask in plain language, “Show me any unusual spike in payment failures in the last hour and possible causes.” The AI could sift through system logs, detect an outage at a particular payment network, and respond with a summary – something that would take a human hours. This kind of autonomous or “agentic” AI – where AI not only answers but can take initiative – is on the horizon. So-called Agentic AI systems can perceive context, make decisions, and collaborate with other agents without constant human prompts. In the context of banking, an autonomous AI agent might dynamically rebalance liquidity between accounts, or proactively reach out to a customer if it predicts they will overdraft, offering a small loan – all automated. Research suggests this “autonomous finance” could greatly improve efficiency and financial inclusion, although it must be carefully governed.

All of these AI use cases – whether machine learning for routing and risk, or generative AI for conversations and decisions – rely on having fast, reliable access to data and actions across the bank. That is precisely what an orchestration layer provides. It becomes the canvas on which AI paints new value. A key point is that AI models themselves can be continuously trained using the rich data flowing through the orchestration layer. For example, as the orchestrator handles transactions, it can log outcomes (approved/declined, fraud/not fraud, customer satisfaction scores, etc.) and feed that back into training datasets to improve the models. This creates a virtuous cycle of learning. The endgame is a bank that operates not just by static policy, but by continuous learning and adaptation – an AI-driven bank. As one report noted, AI in fintech is driving a redefinition of financial services, enabling real-time transactions and self-service in line with consumer expectations.
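The feedback loop described above depends on joining what the orchestrator knew at decision time with outcomes that arrive later. A minimal sketch, assuming features are logged per transaction ID and that confirmed fraud labels arrive separately (both structures are hypothetical):

```python
from typing import Dict, List, Tuple


def build_training_set(
    decisions: Dict[str, dict],
    outcomes: Dict[str, bool],
) -> List[Tuple[dict, int]]:
    """Join features logged at decision time with labels that arrive later.

    decisions maps transaction ID to the feature dict used for scoring.
    outcomes maps transaction ID to True if fraud was later confirmed.
    """
    rows: List[Tuple[dict, int]] = []
    for txn_id, features in decisions.items():
        if txn_id in outcomes:  # unlabeled transactions are skipped, not guessed
            rows.append((features, int(outcomes[txn_id])))
    return rows
```

Logging the features exactly as they were at decision time, rather than recomputing them later, avoids training the model on information it would not have had in production.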

Before we explore specific implementations at various institutions, let’s summarize the AI capabilities an orchestration approach unlocks:

- Smarter Routing & Decisions: The ability to route transactions optimally (for success rate and cost) using ML predictions.

- Fraud/Risk Scoring: Real-time fraud detection with dynamic risk scoring and adaptive authentication when needed – leading to lower fraud and minimal customer friction.

- Automated Compliance: AI performing AML checks, sanction screening, and anomaly detection in compliance, vastly reducing false positives and manual work.

- Predictive Insights: Analytics that predict customer behavior (churn likelihood, product needs) so that banks can take proactive actions. E.g. the orchestration layer noticing a business’s cashflow issues and alerting a manager to offer assistance.

- Personalization: On-the-fly tailoring of offers, messages, and user interface based on AI analysis of the customer’s data. For instance, offering a personalized loan rate or financial tip right when the customer might need it.

- Conversational AI: Seamless integration of chatbots/voice assistants that handle support and even transactions (“conversational banking”), using GenAI to make them more natural and helpful.

- Operational AI: AI helping IT and ops teams by monitoring system health (often called AIOps). E.g. detecting performance bottlenecks or predicting system failures before they happen, then orchestrating preventive measures (like scaling up resources). One example: an AI optimization platform that automatically allocates servers to handle peak volumes and spots performance issues early.

- Autonomous Finance: Early steps toward automation of financial decisions – algorithms moving money or adjusting portfolios for customers based on their goals and real-time data, under proper oversight.

The promise is that AI-enabled orchestration layers transform a bank into a living, learning organism – far removed from the static legacy processes of the past. Banks can thereby deliver the kind of smart, instant, and contextual experiences that customers increasingly expect in the age of AI.

With an understanding of these technological capabilities, let’s now see how different players in the industry are approaching orchestration. Traditional banking giants and upstart fintechs often have very different starting points – legacy incumbents must retrofit or gradually build anew, while fintechs started fresh on modern stacks. How do their orchestration strategies compare, and what can we learn from each?

Orchestration Strategies: Big Banks vs. Fintech Born-Digital

The path to a modern, orchestrated architecture can look very different for a 100-year-old global bank versus a fintech that launched 10 years ago. In this section, we’ll compare how major incumbent banks like JPMorgan Chase and HSBC are tackling core modernization (often via orchestration or new platform initiatives), versus how fintech and “neobank” players like PayPal, Stripe, Adyen, and Revolut architected their systems from the ground up for agility. Understanding these strategies provides insight into best practices and common pitfalls on the modernization journey.

Global Banks: Modernizing from Legacy to Cloud Core

JPMorgan Chase – the largest bank in the US – provides a headline example of an incumbent making a bold core transformation. In 2021, JPMorgan announced it would replace its US retail core banking system with a cloud-native core from Thought Machine (Vault). This is a marquee move: swapping out a legacy core that handles tens of millions of accounts is enormously complex. Rather than attempt a risky overnight switch, JPMorgan’s strategy is likely a phased migration. Thought Machine’s Vault platform is built as a collection of microservices and allows banks to run new products on it while the old core still runs existing products. Over time, more and more accounts and product processing can be migrated to Vault, orchestrated alongside the old core until the legacy can be decommissioned. The end goal for JPMorgan is to move away from siloed, product-specific systems to “a more fluid universal platform where all banking products operate on a single system in real-time.” In other words, to achieve a unified core that handles deposits, loans, payments, etc., with real-time data across the board – exactly the kind of integrated future many banks aspire to. Notably, JPMorgan’s move signals to the industry that cloud-native core tech is mature enough for even the biggest institutions. It also demonstrates how an incumbent can partner with a fintech vendor (Thought Machine, in this case) to leapfrog in technology rather than trying to build everything in-house.

JPMorgan has also shown a multi-pronged approach: while revamping the core, they launched Chase UK, a digital bank in the UK, built entirely on a modern stack. In fact, Thought Machine’s Vault was reportedly used in the Chase UK launch. This approach – launching a separate digital bank or greenfield project – is a common strategy for incumbents to test new technology in a contained environment. Standard Chartered did this with Mox Bank in Hong Kong (built on Thought Machine and other cloud services), and HSBC has taken a similar route with a digital offshoot in the past. The lessons learned and systems built can then be extended back into the larger organization.

HSBC, one of the world’s largest banks, has a vast, globally federated IT estate. Its modernization has involved strategic partnerships and targeted platform upgrades. For instance, HSBC has partnered with cloud providers like AWS and Azure to shift many workloads off mainframes. A notable example is HSBC’s PayMe for Business app in Hong Kong, which was built entirely cloud-native on Microsoft Azure. HSBC’s tech team delivered this mobile payments app in just months by using fully managed Azure services and a microservices architecture orchestrated with Kubernetes. The result: 98% of transactions on PayMe for Business complete in under 0.5 seconds – a testament to the performance of a modern cloud setup. This shows that even a large incumbent can achieve fintech-like speed and user experience when building new services on an orchestration-friendly architecture. HSBC essentially treated PayMe as a digital start-up within the bank, decoupling it from legacy systems (though integrating as needed via APIs). The microservices were each built to be independent and scalable, with their own databases and event hubs for communication. This isolation is exactly how to ensure reliability: one service can be updated or can fail without taking down others – a sharp contrast to monolithic systems.

At the core banking level, HSBC has taken a gradual approach: for example, its private banking division migrated to a modern core (Avaloq system) for wealth management operations, while retail banking cores are being progressively modernized. HSBC also invested in Oracle Cloud@Customer for some on-premises cloud infrastructure – allowing them to run scalable cloud databases in their own data centers to meet data residency and latency requirements. This highlights a common theme for big banks: hybrid cloud deployments. They want the elasticity and service features of public cloud, but need to keep certain sensitive data on-prem or in private clouds. Orchestration layers help manage this hybrid environment by abstracting the physical location of services. A bank’s orchestration platform can call a microservice whether it’s running in AWS, Azure, or the bank’s own data center – the logic is in the integration, not hardcoded to a server.
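One simple way to picture this location abstraction is a registry that maps logical service names to wherever they currently run. The sketch below is illustrative only: `ServiceRegistry` and the example hostnames are hypothetical, and real deployments typically rely on a service mesh or a managed discovery service rather than an in-memory dictionary.

```python
from typing import Dict


class ServiceRegistry:
    """Maps logical service names to their current network location."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, str] = {}

    def register(self, name: str, base_url: str) -> None:
        """Add a service, or repoint an existing one to a new location."""
        self._endpoints[name] = base_url.rstrip("/")

    def resolve(self, name: str, path: str) -> str:
        """Build the full URL for a call, given only the logical name."""
        if name not in self._endpoints:
            raise KeyError(f"unknown service: {name}")
        return f"{self._endpoints[name]}/{path.lstrip('/')}"
```

Callers depend only on the logical name, so moving a service from an on-premises data center to a public cloud means updating one registry entry rather than every workflow that uses it.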

In short, large banks like JPMorgan and HSBC are employing a mix of strategies:

- Selective Core Replacement – Adopting next-gen core banking engines (often from fintech vendors) and phasing migration.

- Greenfield Digital Banks – Standing up new customer propositions on modern tech stacks to pilot the future architecture (e.g. Chase UK, SCB Mox).

- Partnerships for Cloud and Fintech Services – Using cloud providers for scalable infrastructure and tapping fintech solutions for specific needs (e.g. partnering with enterprise fintechs for KYC, fraud, etc., integrated via APIs).

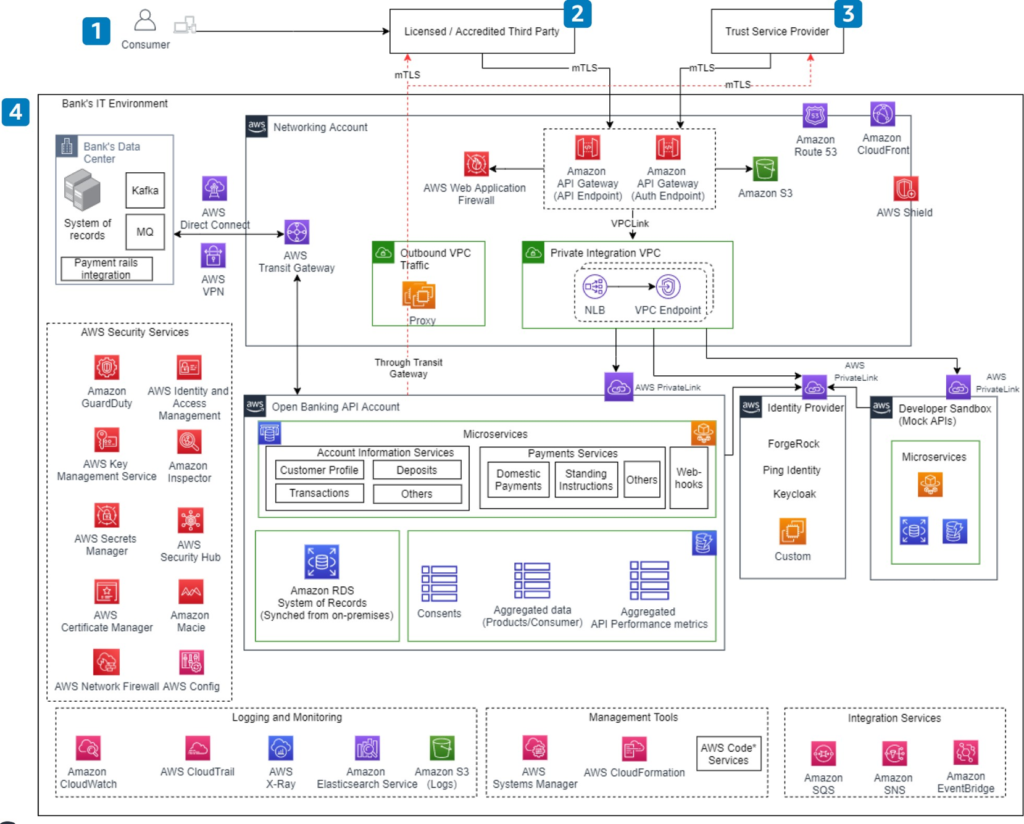

- Layering and APIs – Building API gateways and orchestration layers to connect new components with old. For example, many banks have enterprise API layers to facilitate open banking compliance and to let internal developers access legacy functionality in a standardized way. HSBC, for instance, leveraged AWS to host its open banking API platform, enabling rapid development of new microservices behind those APIs.

The key for incumbents is managing risk: keeping customer trust and operational integrity while transforming. That’s why progressive layering via orchestration is attractive – it minimizes big bang cutovers. When done well, the result is a bank that can say yes to new opportunities quickly. As an illustration, after modernizing much of its tech, Capital One in the U.S. famously shut down its last mainframe and became fully cloud-operated around 2020, allowing it to spin up new services faster and even offer its tech platform to others. Similarly, Spain’s BBVA undertook a decade-long tech transformation in the 2010s (with APIs and cloud at the core) and saw huge growth in digital customers and transactions. These banks exemplify that incumbents can transform, but it requires top-down commitment, talent re-skilling, and often new tech partnerships.

Fintechs and Challengers: Born in the Cloud

Fintech companies and digital-native banks have had the advantage of starting from scratch with modern architecture. They didn’t have legacy cores to contend with, so they built on cloud and microservices from day one. However, that doesn’t mean they have it easy – scaling to millions of users and expanding product lines present challenges of their own, and many early fintechs had to iterate their architectures.

PayPal, one of the original fintechs, provides a story of evolution. In its early years, PayPal’s platform grew quickly and reportedly became a monolithic codebase that was difficult to scale by the mid-2000s. Over time, PayPal migrated to a modular architecture, using techniques like splitting out services and adopting more modern stacks (including Node.js for certain front-end components). By the 2010s, PayPal’s systems were far more scalable and distributed, enabling the company to handle hundreds of millions of transactions, especially after its separation from eBay. PayPal also uses AI heavily – for example, its fraud detection (and that of its subsidiary Braintree) uses machine learning at massive scale to make instant risk decisions on payments across the globe.

Stripe, founded in 2010, started from a clean slate with an API-first approach. Stripe’s product from the outset was a unified payment processing platform delivered via simple APIs for developers. Under the hood, Stripe built a highly scalable infrastructure (largely on AWS) that is broken into many services – handling everything from storing card details (with high security) to an AI-based fraud system called Radar. Stripe is known for its engineering prowess and culture of simplicity; for example, they emphasize having a single source of truth for data and ensuring every new service integrates into a cohesive data model. This echoes the orchestration philosophy: even if you have many microservices, you present a unified interface and experience. Stripe’s platform has expanded to include billing, treasury services, identity verification, etc., yet they maintain consistency through strong internal APIs and an “orchestration” of services behind the scenes that appears as one Stripe to customers.

Adyen, a Dutch payments company founded in 2006, is often cited as an example of a single, unified platform approach. Adyen consciously built all its technology in-house, as one integrated system – it did not grow by acquiring other vendors, so it avoided the patchwork issue altogether. Adyen’s platform handles the entire payment lifecycle: gateway, risk checks, processing, acquiring, issuing, settlement – all on a common backbone. They even manage their own data centers and hardware for some components to optimize performance. The result is exceptional reliability and control. Adyen can introduce features across the stack seamlessly (for example, when they add a new payment method, it works globally and data flows into the same risk engine and reporting). A description from a recent analysis: “Adyen offers a single in-house developed solution that manages the entire payments lifecycle, including gateway, risk management, processing, issuing, acquiring, and settlement, supporting 100+ countries.” In other words, Adyen itself acts as an orchestration layer for merchants, abstracting away the complexity of multiple payment systems into one platform. For Adyen to achieve this, they designed the system from the start with modular components but tight integration. They also favor open-source and build many tools themselves (rather than relying on third-party software), which ensures each part is tailored to fit the overall architecture. The “single platform” philosophy is a competitive advantage: it yields flexibility, agility, and cost savings that come from not having to reconcile multiple systems.

Other fintech examples:

- Revolut, launched in 2015, and similar neobanks like N26, built their initial banking products on modern core banking SaaS platforms (Revolut and N26 both leveraged Mambu as their core banking engine for account and transaction management). This allowed them to get to market quickly without building a core ledger from scratch. They then customized heavily and built additional capabilities around that core. Revolut, for instance, developed a multitude of microservices for its app functionalities (spending analytics, crypto trading, etc.) and orchestrated them through a robust API layer. In fact, Revolut’s architecture is built on event-driven microservices connected via a streaming backbone, a pattern many companies struggle to implement but one Revolut adopted successfully early on. As Revolut’s co-founder and CTO Vlad Yatsenko has discussed, they built a “vision of a new banking backend” using modern tools, dealing with challenges of scale as they grew to millions of users. Interestingly, while fintechs start free of legacy, they face scale as the new challenge: some had to re-architect components when user counts and transaction volumes exploded. This happened to UK fintech Monzo, for example, which had to split its core services as it hit certain limits. The advantage is that modern systems can be re-architected in-flight more easily than legacy ones (thanks to containerization, continuous delivery, etc.).

- Big Tech in Finance: Companies like Apple and Google have entered finance by embedding banking services into their ecosystems (e.g. Apple Card, Google Pay). They rely on partner banks behind the scenes (Goldman Sachs for Apple Card) but their tech approach is all orchestration – integrating the bank’s APIs into their user experience. These examples highlight embedded finance, which we discuss later, but they showcase modern API orchestration: Apple built an interface on iPhones that orchestrates credit card issuance, transaction handling, rewards, etc., while the core account is with Goldman’s systems. The user only experiences Apple’s smooth integration. This is a case of a tech company orchestrating a bank’s services in a way opposite to our main discussion, but it underscores how powerful good orchestration is in delivering value.
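The event-driven pattern mentioned above can be illustrated with a small Python sketch. It uses a hypothetical in-memory `EventBus` as a stand-in for a real streaming backbone such as Kafka; the topic name, the event fields, and the two subscriber services are assumptions made for illustration and do not describe any particular fintech's implementation.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[dict], None]


class EventBus:
    """In-memory publish/subscribe hub (illustrative stand-in for a stream)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = {}

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers.setdefault(topic, []).append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver the event to every subscriber; return the delivery count."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


# Two independent "services" that react to the same event.
spending_by_category: DefaultDict[str, float] = defaultdict(float)
reward_points: List[int] = []


def update_spending_analytics(event: dict) -> None:
    spending_by_category[event["category"]] += event["amount"]


def award_reward_points(event: dict) -> None:
    # Hypothetical rule: one point per whole currency unit spent.
    reward_points.append(int(event["amount"]))


bus = EventBus()
bus.subscribe("card.payment", update_spending_analytics)
bus.subscribe("card.payment", award_reward_points)

# The payment service only publishes; it knows nothing about the consumers.
delivered = bus.publish("card.payment", {"category": "groceries", "amount": 42.5})
```

The design choice worth noting is that a new consumer (say, a fraud check) can be added with one more `subscribe` call, without touching the publisher.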

Key Takeaways: Fintechs teach us the value of:

- Cloud-Native Design: Use of stateless microservices, distributed databases, and cloud infrastructure from day one. For example, 10x Banking (a fintech core provider) built its platform entirely on stateless Java microservices orchestrated with Kubernetes, using Kafka for event streaming. This enables linear scalability and resilience. A quote from 10x’s tech description: “The 10x platform was designed from scratch as a stateless microservice platform. Microservices are orchestrated with Kubernetes and use PostgreSQL and Kafka… accessed via REST and GraphQL APIs.” – a blueprint for modern banking systems.

- Unified Data and Services: Fintechs avoid siloed databases; they often have one primary data lake or real-time data pipeline feeding all services. This means the “single version of truth” issue is solved – every service sees the latest data. Traditional banks are now trying to achieve something similar by using data streaming and APIs to sync data between old systems, but fintechs started without that baggage.

- API-First Everything: Every function is an API (internal or external) – which makes integration and partnership easy. A bank like Citi has learned from this, establishing developer portals to offer APIs (e.g. for account info, payments) to fintech partners in an open banking style, essentially adopting the fintech mindset within an incumbent.

- Continuous Deployment and Experimentation: Fintechs deploy code updates very frequently (often daily or more), testing and iterating quickly. This is facilitated by microservices that can be updated independently (as Thought Machine noted, microservices allow each team to upgrade their service without waiting for others). Big banks are adopting DevOps and CI/CD too, but fintechs have had it baked in culturally. The result is faster improvement cycles and the ability to try new features on subsets of users (A/B testing) with low risk.
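As a small illustration of trying new features on subsets of users, the following Python sketch shows deterministic percentage rollout using a hash of the user and feature names. The function name `in_rollout` and the example identifiers are hypothetical; real feature-flag systems add targeting rules, kill switches, and metrics on top of this idea.

```python
import hashlib


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """Return True if this user falls inside the first `percent` buckets.

    The bucket is derived from a stable hash, so the same user always gets
    the same answer for the same feature, with no stored state.
    """
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100   # value in the range 0..99
    return bucket < percent


# Example: expose a hypothetical "instant-transfer" feature to 10% of users.
users = [f"user-{n}" for n in range(1000)]
early_adopters = [u for u in users if in_rollout(u, "instant-transfer", 10)]
```

Because the bucket is fixed per user and feature, raising the percentage from 10 to 50 only adds users; nobody who already had the feature loses it.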

In practice, the line between bank and fintech strategies is blurring as we see convergence:

- Banks are trying to emulate fintech agility by implementing microservices, DevOps, and even launching BaaS (Banking as a Service) offerings where they expose their services via APIs for others to embed.

- Fintechs, as they grow, sometimes seek banking licenses or partner with banks to expand products (e.g. offering insured accounts, etc.), which means they interface with legacy banking systems too.

Both realize that orchestration and modular architecture are the only way to meet modern expectations. As a Forbes tech council article noted, over 90% of banks plan to increase cloud usage and many are adopting platform-as-a-service models to get the flexibility they need. And on the flip side, fintechs that once offered niche services are expanding into full-service platforms (e.g. payments companies adding lending, deposits), which requires orchestrating a broader set of services.

Having compared these strategies, we can glean that major banks often focus on integrating and phasing in new systems alongside legacy (via orchestration layers), while fintechs design for orchestration natively but later face integration needs as they expand. Both end up building what is essentially a banking ecosystem of services connected through a central orchestrating brain.

Next, we will look at the technology vendor landscape that supports these transformations. A number of platform providers – both long-standing core banking vendors and new cloud players – are offering orchestration and core modernization solutions. How do they stack up?

The Orchestration Platform Landscape: Key Players and Solutions

Banks undertaking modernization have an important decision: do they build their own orchestration platform or leverage a vendor solution (or a mix)? In practice, many banks use a combination – adopting a modern core or middleware product and then customizing around it. The market now offers a spectrum of competitive orchestration and core banking platforms, from fintech start-ups to established banking software giants. Let’s survey some of the notable players and how they contribute to the orchestration story:

- Thought Machine – Cloud-Native Core Engine. Thought Machine’s Vault platform is a high-profile newcomer (founded 2014) that provides a cloud-native core banking system. It’s built on microservices and uses a unique “smart contracts” approach for product configuration (meaning banks can write code to define products like loans or deposits). Thought Machine emphasizes eliminating the constraints of legacy – Vault runs in the cloud (any cloud or on-prem containers) and is designed to avoid the pitfalls of older cores. For example, Vault’s kernel is composed of around 20 microservices, each managed by a small team. This aligns with best practices: small, expert teams owning services for agility. Thought Machine is being adopted by both incumbents (JPMorgan, Lloyds, Standard Chartered’s Mox, etc.) and challenger banks, because it allows progressive migration. As an orchestration enabler, Vault exposes APIs for everything, so banks can integrate it with legacy components during transition. It’s a pure software play – banks run it themselves (or via partners like IBM). The success of Thought Machine indicates banks’ appetite for modern core solutions that can slot into an orchestrated architecture gradually.

- 10x Banking – “Meta-core” as a Service. 10x was founded by former Barclays CEO Antony Jenkins with the goal to provide a next-gen core banking and integration platform. The 10x SuperCore (sometimes called meta-core) is a SaaS platform that is cloud-native, covering payments, accounts, loans, etc. Technically, as we noted, it’s built entirely on stateless microservices orchestrated by Kubernetes, using Kafka for events. Each microservice in 10x’s platform has its own database and is accessible via REST/GraphQL APIs. This design gives both scalability and flexibility – banks can choose which components to use and integrate others via the API gateway. 10x is positioning itself for large banks wanting modern core capabilities as well as for fintechs as a BaaS provider. One example client is Westpac (a big Australian bank) which is using 10x for a banking-as-a-service offering. 10x’s recent partnership with Alloy (a fintech for identity decisioning) shows it’s curating an ecosystem of specialized services on its platform, effectively baking orchestration and third-party integration into the core. Notably, 10x’s approach to migration emphasizes a simple API entry-point architecture to minimize risk when moving from legacy. Also, 10x often highlights that it can remove many limitations of older and even some “neo-core” systems by offering better scaling and customization options. In summary, 10x provides a ready-made orchestrated core that banks can adopt instead of building their own microservices stack from scratch.

- Mambu – Composable Banking SaaS. Mambu is a leading SaaS core banking platform (founded 2011) known for its composable architecture. It was designed as a cloud service from the start and often used by digital banks, lenders, and fintechs for quick launch of products. Mambu’s philosophy is to be the core ledger and processing engine, while integrating easily with an ecosystem of other fintech services via APIs. In fact, Mambu provides an integration and orchestration layer out-of-the-box – it has a robust set of APIs for every function and even a workflow engine called the Mambu Process Orchestrator (MPO). This low-code orchestrator allows banks to connect Mambu with other applications (like a KYC service or a card processor) and define processes spanning them. Essentially, Mambu acknowledges it’s part of a larger solution and facilitates orchestration rather than trying to do everything itself. Mambu is used by many prominent digital banks (e.g. N26 in Germany, Tandem in the UK) and even big banks for certain lines of business (e.g. ABN AMRO used Mambu for a new lending offering). It excels in rapid deployment – banks can get a basic core with accounts, lending, deposits up and running in weeks, then compose additional features as needed. With Mambu, banks benefit from continuous upgrades (SaaS model) and a marketplace of pre-integrated partners (like for credit scoring, customer onboarding, etc.), which accelerates building an orchestrated ecosystem. Mambu is a strong choice when banks want cloud-native core functionality without managing infrastructure, and it plays nicely in an API-driven architecture.

- Temenos – Evolving an Industry-Leading Core. Temenos is a long-established core banking software provider (known for T24 core banking, now called Temenos Transact, and Temenos Infinity for digital channels). Temenos has pivoted heavily to become cloud-native and AI-enabled in recent years. They’ve re-architected their products into microservices and containerized components, allowing clients to deploy on any cloud or on-prem in a flexible way. Temenos emphasizes that event-driven microservices and open APIs are now at the heart of its platform, enabling easier integration with fintech partners and faster changes. A quote from Temenos: “By exposing the platform’s underlying banking capabilities as open API, event-driven microservices, Temenos helps banks adopt the latest technology while benefiting from market-leading functionality.” They have also incorporated AI for things like automated code migration (their “Leap” program uses AI to automate upgrading legacy Temenos instances). Temenos’s advantage is its rich functionality from decades of banking experience – banks get a comprehensive suite (retail, corporate, wealth, payments, etc.) all on one codebase. In an orchestration context, Temenos can serve as both the core system and the integration hub if a bank goes all-in on it, or it can be one component in a larger mosaic. Temenos has even introduced Temenos Banking Cloud, a SaaS offering with pre-configured products and the ability to turn on features via APIs. This shows even incumbents are moving toward composable, orchestrated delivery. Thousands of banks use Temenos globally, and many are upgrading to its latest cloud version rather than switching to new players – showing the competitive push: legacy vendors evolving to meet the orchestration era head-on.

- Finastra – Open Platform and Ecosystem. Finastra (formed by the merger of Misys and D+H) provides core banking (Fusion Phoenix, Essence, etc.), payments, lending systems and more. Their key initiative for the future is FusionFabric.cloud, an open developer platform and app marketplace. Finastra has essentially opened up its core systems via APIs and invited fintech developers to build apps that plug into these cores – creating an ecosystem of third-party solutions that clients can adopt quickly. FusionFabric.cloud acts as an orchestration and innovation layer on top of Finastra’s core engines: fintechs can use sandbox APIs to develop, and banks can deploy those apps securely if they choose. This addresses a common need: banks using big vendor cores often want niche innovations (say, a new personal finance tool) – instead of building custom or waiting for Finastra to develop it, they can now plug in an app from the marketplace. A case: Allied Payment Network built a real-time bill pay solution and launched it on FusionFabric; a credit union integrated it to enable instant account-to-account transfers in 2 days (development that would normally take 10 weeks). This dramatic reduction in integration time – 10 weeks down to 2 days – shows the power of a well-orchestrated open platform. Finastra provides the orchestration glue (common authentication, API management, data integration) so that third-party apps can securely operate with core data. Essentially, Finastra aims to be the facilitator of a banking app store, where the orchestration layer is the cloud platform connecting banks and fintechs. This is a different take on orchestration – more about business orchestration and ecosystem – but critically important for agility. It turns a bank’s core into a flexible base that can quickly gain new capabilities. Alongside this, Finastra continues to modularize its own core offerings and containerize them for cloud deployments. They partner with cloud providers (e.g. Microsoft Azure) to offer their software in SaaS mode. For banks that use Finastra for core or payments, FusionFabric can serve as an orchestration layer that also extends to external fintech services, which can accelerate transformation significantly.

Other notable mentions in the landscape:

- FIS and Fiserv – Very large core banking and payments providers. They have legacy cores but also offer newer cloud-based solutions (e.g. FIS’s Modern Banking Platform, and Finxact, the cloud core that Fiserv acquired). Many mid-tier banks rely on their tech. They are adding API layers and offering BaaS as well – for instance, Fiserv’s DNA core has an open architecture that some banks use in a best-of-breed approach.

- Oracle – Oracle’s banking suite has been refactored for microservices and Oracle Cloud. Oracle offers an integration hub and is pushing its database tech (with things like Oracle Autonomous Database) to modernize bank data management.

- SAP – Though not a big core banking player broadly, SAP’s banking services run in some large institutions and focus on orchestrating finance and risk data (especially for corporate banking).

- Smaller Fintech Platforms: Dozens of emerging BaaS and orchestration startups exist. For example, Banking-as-a-Service providers like Solarisbank in Europe or Green Dot’s platform in the US allow brands to embed accounts/cards via APIs – effectively acting as an orchestrated banking utility under the hood. Payment orchestration specialists (Spreedly, Apexx, etc.) help companies route transactions across multiple processors – a niche example of orchestration as a service. Fraud orchestration startups (like Feedzai, Hawk AI) allow banks to input data streams and orchestrate multiple fraud detection models.
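Payment orchestration, routing a transaction across multiple processors, can be sketched in a few lines of Python. The example below assumes a simple ordered failover policy; the processor names, the `ProcessorError` exception, and the reference format are all hypothetical and do not reflect the API of Spreedly, Apexx, or any other vendor.

```python
from typing import Callable, List, Tuple


class ProcessorError(Exception):
    """Raised by a (simulated) processor when it cannot take the payment."""


# A processor is modeled as a name plus a charge function returning a reference.
Processor = Tuple[str, Callable[[int], str]]


def route_payment(amount_cents: int, processors: List[Processor]) -> dict:
    """Try each processor in priority order and stop at the first success."""
    failed_attempts = []
    for name, charge in processors:
        try:
            reference = charge(amount_cents)
        except ProcessorError as exc:
            # Record the failure and fall through to the next processor.
            failed_attempts.append((name, str(exc)))
            continue
        return {"status": "approved", "processor": name,
                "reference": reference, "failed_attempts": failed_attempts}
    return {"status": "failed", "processor": None,
            "reference": None, "failed_attempts": failed_attempts}


def primary_charge(amount_cents: int) -> str:
    raise ProcessorError("timeout")          # simulate an outage


def backup_charge(amount_cents: int) -> str:
    return f"bk-{amount_cents}"              # simulate a successful charge


result = route_payment(1250, [("primary", primary_charge),
                              ("backup", backup_charge)])
```

Real routers typically also weigh cost, approval rates, and card region when ordering the processors; the fixed priority list here is the simplest possible policy.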

The competitive landscape indicates a few trends:

- Convergence on Cloud-Native and Microservices: Every serious vendor has moved to offer a microservices, API-driven version of their platform. Those who haven’t are losing relevance. We see terms like “cloud-native, microservices-based” architecture in almost every product description now. For example, Temenos’ cloud benchmark touts microservices on MongoDB with elastic scaling, and FIS markets its new core as cloud-native to counter newcomers. This is a big shift from a decade ago when cores were largely monolithic. It validates the microservices approach as the future for banking software. The benefit is continuous updates, easier integration, and containerized deployment (Temenos explicitly notes all services are packaged in containers for portability).

- Openness and APIs: Even traditionally closed systems are opening up. Open APIs and sandbox environments are now common – vendors know that their system must play well with third parties. This openness also allows for easier gradual adoption; a bank can implement a new vendor’s component alongside old ones by using APIs to integrate, rather than a full replacement.

- Embedded AI: Many platforms are embedding AI features – e.g., Temenos using AI for migration (Leap), Finastra’s FusionFabric enabling AI apps like Monotto’s savings bot, Oracle’s AML with AI reducing false alerts, etc. Some are even offering AI-as-a-service on their cloud platforms. This means banks can get AI benefits out-of-the-box rather than having to build all models in-house. It also means orchestration layers often come with AI monitoring tools (e.g. for fraud).

- Specialization vs. Consolidation: There’s a dynamic tension – some vendors try to offer an all-in-one solution (consolidation), while others focus on one piece and integrate (specialization). For example, Thought Machine and 10x try to cover core processing for all products (consolidated core), whereas a fintech like Zeta might focus just on credit card processing modernization and integrate with other systems for a full solution. Banks must decide if they want a primary anchor platform or an assembly of best-of-breed components. Orchestration allows either approach: one could orchestrate multiple specialized systems, or adopt one main system and orchestrate around it.

In summary, banks today have a rich array of platform choices to power their orchestration journey. Whether it’s a modern core (Vault, 10x, Mambu) or an open banking middleware (FusionFabric, etc.), the tools exist to break free of legacy constraints. The competitive pressure among these vendors is driving rapid innovation – which ultimately benefits banks by lowering the risk and cost of modernization. The decision often comes down to the bank’s specific context: a smaller bank might fully outsource core to a cloud platform, while a big bank might use an orchestration layer to mix an on-prem core, a cloud component for new products, and various fintech APIs.

Next, let’s look at some case studies and examples of banks that have successfully deployed orchestration and modern platforms. How did these projects unfold, and what benefits have they realized?

Case Studies: Success Stories in Orchestration and Modernization

Implementing an AI-enabled orchestration layer or modern core is a major endeavor. Here we highlight a few illustrative examples – across different domains like payments, core banking, and operations – where orchestration-driven approaches have delivered notable success. These case studies provide practical insights into the transformation journey.

Case Study 1: Barclays – Orchestrating Post-Trade Services with Camunda

Barclays, a global bank, provides a compelling example of using a process orchestration layer to modernize a critical part of its operations. In its investment bank, Barclays had a complex post-trade processing environment (for clearing & settling trades) that was burdened by legacy systems and fragmented processes. The legacy setup was monolithic, hard to change, and prone to manual exceptions and reconciliations that consumed significant time. This was especially problematic as trade volumes rose and new regulations (like shortening settlement cycles to T+1) demanded more speed and accuracy.

To address this, Barclays introduced Camunda 8, a cloud-native process orchestration platform, as a new layer to coordinate post-trade workflows end-to-end. Camunda allowed Barclays to model and automate the entire trade lifecycle (trade capture, validation, matching, settlement, etc.) as a series of orchestrated microservices and human tasks. Essentially, it sits on top of various systems (some new, some legacy) and ensures each step occurs in the right order, with proper error handling and visibility.

The results have been impressive. Barclays achieved much greater operational visibility and control over its post-trade processes. Staff can now see exactly where a trade is in the pipeline and where any bottlenecks are, thanks to Camunda’s tracking dashboards. Shakir Ahmed, a Director of Ops Tech at Barclays, noted that with Camunda, “it’s much easier for them to see what’s going on… they now have tools to see where exactly a trade is stuck”. This was a game-changer compared to the previous opaque system where problems could get lost in the cracks.

Camunda’s orchestration also enabled straight-through processing (STP) rates to improve by handling exceptions in a more structured way. Many tasks that previously required manual intervention were automated, reducing delays. For example, if a trade data mismatch occurs, Camunda can automatically trigger a request for data from a reference system or apply a predefined rule, rather than simply stopping and waiting for human review. When human input is needed (like approving an exception), the system routes the task to the right team and tracks it.
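The exception-handling flow described above can be sketched as follows. This is plain Python, not Camunda's API or a BPMN model, and the trade fields, the `REFERENCE_DATA` lookup, and the queue are hypothetical. It shows the shape of the logic: attempt an automatic repair first, and route to a human queue only when that fails.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical reference system keyed by trade ID.
REFERENCE_DATA: Dict[str, dict] = {"T-1": {"settlement_currency": "USD"}}


@dataclass
class Trade:
    trade_id: str
    settlement_currency: Optional[str]   # as captured by our side
    counterparty_currency: str           # as reported by the counterparty
    history: List[str] = field(default_factory=list)


def process_trade(trade: Trade, reference: Dict[str, dict],
                  manual_queue: List[str]) -> str:
    """Run one trade through validation, repair, and settlement steps."""
    trade.history.append("validated")
    if trade.settlement_currency != trade.counterparty_currency:
        # Mismatch: try to repair from reference data before involving people.
        ref = reference.get(trade.trade_id)
        if ref and ref["settlement_currency"] == trade.counterparty_currency:
            trade.settlement_currency = ref["settlement_currency"]
            trade.history.append("auto-repaired")
        else:
            # No automatic fix available: route to the operations team.
            trade.history.append("routed-to-ops")
            manual_queue.append(trade.trade_id)
            return "pending_manual_review"
    trade.history.append("settled")
    return "settled"


ops_queue: List[str] = []
repairable = Trade("T-1", None, "USD")
unrepairable = Trade("T-2", "EUR", "USD")
status_1 = process_trade(repairable, REFERENCE_DATA, ops_queue)
status_2 = process_trade(unrepairable, REFERENCE_DATA, ops_queue)
```

The `history` list plays the role of the tracking view: for any trade it records which steps ran, which is what lets staff see where a trade is stuck.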

Moreover, Barclays could adapt to regulatory changes faster. When new rules for settlement or reporting came, they only needed to adjust the Camunda process models or add a microservice, rather than overhaul a monolithic app. This agility in regulatory compliance is crucial in post-trade, where rules are always evolving.

In short, Barclays’ case demonstrates that even for very complex, high-volume banking operations, implementing a dedicated orchestration layer (Camunda, in this instance) can yield significant benefits: higher resilience, transparency, efficiency, and scalability for the future. It’s a microcosm of the larger theme – decoupling processes from legacy constraints and using modern workflow automation to drive improvement. It also shows that not all orchestration is about external APIs and real-time payments; it can be equally about internal process automation.

Case Study 2: JPMorgan Chase – Phased Core Modernization with Thought Machine

We touched on JPMorgan’s strategy earlier – here we frame it as a case study of progressive core replacement. In 2022, JPMorgan Chase inducted Thought Machine into its Hall of Innovation and publicly committed to deploying Thought Machine’s Vault core across its retail bank. This is one of the most ambitious core modernization efforts ever, given Chase’s size (over 60 million customers).

The approach JPMorgan is taking appears to be a gradual migration product-by-product to the new core, orchestrated alongside the existing systems. For example, they might start by launching a new savings account product on Vault while existing accounts stay on the old system. Customers opening that product are on the new core (and get real-time experience, new features), and the orchestration layer connects Vault back to the old core’s general ledger and reporting systems so everything stays in sync. Over time, JPMorgan can migrate other account types and even entire customer segments to the new platform. By doing this progressively, JPM reduces risk – there’s no single “big bang” switch-over.

One tangible result cited is that this modernization will allow Chase to run all products on a single platform in real time rather than across siloed systems. That means better cross-product insights (e.g. seeing a customer’s checking and credit card in one place), faster product development (design once on Vault vs. changing multiple systems), and potential cost efficiencies long-term (less duplication of infrastructure). While full results will play out over the coming years, JPMorgan’s early progress is encouraging enough that it expanded the project; in 2023 they also decided to use Vault for their UK digital bank.

An interesting angle is how JPMorgan’s orchestration architecture manages the coexistence of old and new. They have a home-built integration framework (often referred to as “service mesh” or API layer) that allows various systems to communicate. For instance, if a long-time customer with accounts on the old core opens a new account that’s on Vault, the orchestration layer must ensure that customer sees all accounts together in the mobile app and that things like debit card linking, statements, etc., work seamlessly. JPMorgan’s investment in API-led connectivity pays off here – essentially treating the legacy core and new core as two services behind a unified API facade for the channels. This way, the customer experience remains unified even during the transition. Over time, as more moves to the new core, the legacy’s role shrinks and eventually that API just points entirely to the new system.
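The unified API facade idea can be illustrated with a short Python sketch. It assumes two in-memory stand-ins for the legacy and new cores and a hypothetical set of migrated products; none of the names reflect JPMorgan's or Thought Machine's actual interfaces. The facade merges accounts from both cores for the channel and decides which core hosts a newly opened product.

```python
from typing import Dict, List, Set


class InMemoryCore:
    """Stand-in for a core banking system; a real one sits behind an API."""

    def __init__(self, name: str, accounts: Dict[str, List[dict]]) -> None:
        self.name = name
        self._accounts = accounts

    def list_accounts(self, customer_id: str) -> List[dict]:
        # Tag each account with its source core for demonstration purposes.
        return [{**account, "core": self.name}
                for account in self._accounts.get(customer_id, [])]


class AccountFacade:
    """Single entry point for channels during the coexistence period."""

    def __init__(self, legacy: InMemoryCore, new: InMemoryCore,
                 migrated_products: Set[str]) -> None:
        self._legacy = legacy
        self._new = new
        self._migrated_products = migrated_products

    def list_accounts(self, customer_id: str) -> List[dict]:
        # The mobile app sees one list, regardless of where accounts live.
        merged = (self._legacy.list_accounts(customer_id)
                  + self._new.list_accounts(customer_id))
        return sorted(merged, key=lambda account: account["account_id"])

    def core_for_new_account(self, product: str) -> str:
        # Products already migrated are opened on the new core.
        if product in self._migrated_products:
            return self._new.name
        return self._legacy.name


legacy_core = InMemoryCore(
    "legacy", {"C-1": [{"account_id": "CHK-001", "product": "checking"}]})
new_core = InMemoryCore(
    "new", {"C-1": [{"account_id": "SAV-900", "product": "savings"}]})
facade = AccountFacade(legacy_core, new_core, migrated_products={"savings"})
accounts = facade.list_accounts("C-1")
```

As more products are added to the migrated set, the facade sends more traffic to the new core; once the set covers everything, the legacy branch is dead code and can be removed without the channels noticing.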

The key success factor in JPM’s case is strong top-down commitment and engineering talent. They reportedly allocated a large team and resources (including acquisitions of fintech talent) to make this happen, and set realistic timelines. They also leveraged the vendor’s expertise closely, with Thought Machine engineers working alongside JPMorgan’s – a model of co-creation that is often necessary in such large projects.

For other banks, JPMorgan’s case sends a message: modern core technology is real and scalable, and you can execute a phased core renewal using orchestration to bridge old and new. It de-risks the journey; as one Thought Machine exec said, “It signals to the industry that cloud-native core tech is the future.” While not every bank is as large or resource-rich as Chase, the principles of incrementalism and heavy use of APIs/integration hold true universally.

Case Study 3: Isbank – AI-Driven Fraud Orchestration Overhaul

Türkiye İş Bankası (Isbank), Turkey’s largest bank, undertook a comprehensive overhaul of its fraud management systems in the late 2010s. The challenge was to future-proof fraud operations for the coming decades by combining modern AI solutions with existing systems. They wanted to reduce fraud losses while increasing efficiency for investigators.

Isbank implemented a fraud orchestration platform from a specialist vendor (FCase) to act as a central hub for all fraud-related processes. This platform integrated data and alerts from the bank’s legacy fraud detection tools and new machine learning models into one workflow. The orchestration platform would triage alerts, apply AI analytics to prioritize and enrich cases, and route them to investigators with a unified interface. Essentially, it sat between the detection systems and the human investigation teams.

The results were impressive: Isbank drastically reduced fraud incidents (exact figures weren’t public, but the bank indicated a significant drop in fraud write-offs) and also improved the efficiency of its fraud team. Investigators could do more with the same resources because the system automated so much of the case handling. By blending legacy rule-based systems (which the bank trusted and had tuned over years) with new AI pattern-recognition systems under one orchestrator, Isbank achieved the best of both worlds. The AI could catch new fraud patterns that rules missed, and the orchestration ensured no alert fell through the cracks, with consistent processes applied bank-wide.

An added benefit was scalability for future channels and products. As Isbank introduces new services (mobile wallets, etc.), they can plug those into the fraud orchestration layer rather than building separate fraud controls from scratch. The orchestrator provides a modular way to extend coverage – just add connectors or new analytic models and everything still feeds into the same case management flow. This is crucial as fraudsters constantly shift to whatever channel is weakest; a unified defense gives banks a fighting chance to respond quickly everywhere.

Isbank’s case highlights that orchestration isn’t only for customer-facing improvements; it’s also about internal process optimization and risk management. By automating and coordinating complex processes (fraud operations often involve multiple departments and data sources), banks can significantly reduce risk and cost. In technical terms, it shows the pattern of using an “orchestration hub” for AI and rules: AI models and rules each do what they do best, while orchestration logic decides when to use which and how to consolidate their outputs.
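To make the hub pattern concrete, here is a minimal sketch of alert consolidation. It does not describe the FCase product or Isbank’s configuration: the `Alert` and `Case` shapes, the 0.8 urgency threshold, and the rule that agreement between detectors adds 0.1 to priority are all assumptions chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Alert:
    transaction_id: str
    source: str    # "rules" or "ml" (hypothetical detector labels)
    score: float   # 0.0 to 1.0, as reported by the detection system
    reason: str


@dataclass
class Case:
    transaction_id: str
    priority: float = 0.0
    sources: set[str] = field(default_factory=set)
    reasons: list[str] = field(default_factory=list)
    queue: str = "standard"


def orchestrate(alerts: list[Alert], urgent_threshold: float = 0.8) -> list[Case]:
    """Merge alerts per transaction, prioritize, and assign an investigator queue."""
    cases: dict[str, Case] = {}
    for alert in alerts:
        case = cases.setdefault(alert.transaction_id, Case(alert.transaction_id))
        case.priority = max(case.priority, alert.score)
        case.sources.add(alert.source)
        case.reasons.append(f"{alert.source}: {alert.reason}")

    for case in cases.values():
        # Assumed policy: agreement between independent detectors raises priority.
        if len(case.sources) > 1:
            case.priority = min(1.0, case.priority + 0.1)
        case.queue = "urgent" if case.priority >= urgent_threshold else "standard"

    # Highest-priority cases first, so investigators see them at the top.
    return sorted(cases.values(), key=lambda c: c.priority, reverse=True)


alerts = [
    Alert("tx-1", "rules", 0.75, "amount over limit"),
    Alert("tx-1", "ml", 0.70, "unusual device"),
    Alert("tx-2", "ml", 0.40, "new payee"),
    Alert("tx-3", "rules", 0.90, "blocked country"),
]
cases = orchestrate(alerts)
```

Because every detector feeds the same `orchestrate` step, adding a new channel or model means producing more `Alert` objects rather than building a separate case workflow.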

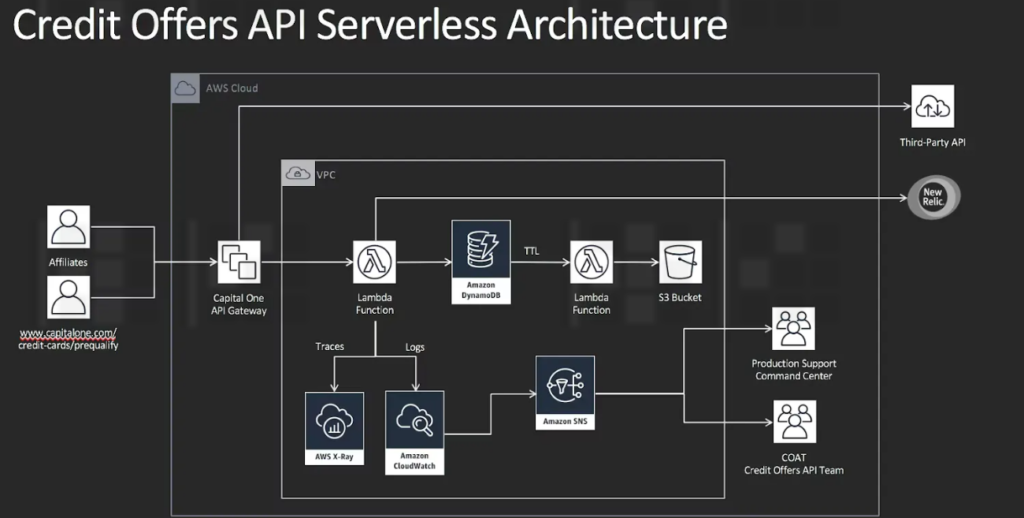

Case Study 4: Capital One Bank – Becoming a “Tech Company” with Microservices

Capital One in Singapore is often lauded as one of the most successful digital transformations of a large bank. Over roughly a decade (mid-2000s to mid-2010s), Capital One went from being seen as a traditional bank to being awarded “World’s Best Bank” multiple times. A key enabler was its early and aggressive adoption of cloud, microservices, and data analytics.

Capital One re-architected many of its systems using a microservices architecture deployed on a private cloud (and later hybrid cloud). They systematically broke down monoliths – for example, their customer-facing systems were refactored into microservices that could be updated more frequently. Capital One also embraced DevOps and site reliability engineering; they were one of the first banks to implement a DevOps toolchain at scale, allowing them to do thousands of software releases a year (a huge increase from pre-transformation days).

One concrete example: Capital One’s retail banking platform used to have quarterly release cycles, but after modernization, they moved to an on-demand release model for many components, with 90% of applications cloud-ready and able to be released in hours if needed. This agility manifested in how quickly Capital One rolled out new digital features like their “digibank” mobile app, or integrating services like budgeting tools and digital loan origination into their ecosystem.

Capital One also built an internal API gateway and developer platform that allowed different teams – and even external partners – to easily consume and offer services. When India’s UPI real-time payment system came, Capital One integrated it rapidly thanks to their API-ready architecture, grabbing a big market share in digital payments through their subsidiary digibank.

The outcomes: Capital One saw huge growth in digital customer engagement – by 2020, over 2/3 of their customers were active on digital channels, and digital customers were bringing in twice the income of traditional ones. Operationally, Capital One reported improvements like 85% reduction in deployment times and significantly fewer critical incidents due to more resilient microservices designs (if one service failed, it didn’t topple everything else). On the business side, Capital One launched entirely new businesses (like a standalone mobile-only bank in India, and a successful SME lending platform) very quickly, leveraging their modern tech backbone.

Capital One’s story is often summed up as “from bank to tech company”. Culturally, they transformed to think in terms of customer journeys, data, and platform ecosystems – supported by the tech that allowed those ideas to be executed in software rapidly. It’s a case study in holistic transformation: not just inserting a technology layer, but reorganizing teams, adopting agile methods, and even partnering with startups (they ran hackathons and fintech programs to infuse innovation).

For banks considering the orchestration and microservices route, Capital One provides confidence that it can lead to tangible competitive advantage. Their CEO has noted that technology was a major factor in Capital One doubling its market capitalization and being able to expand regionally with digital offerings. Essentially, Capital One created an internal orchestration capability – they can integrate any new tech (AI, blockchain, etc.) much faster now because their architecture is modular. For instance, they’ve been quick to experiment with AI in credit scoring and chatbots, embedding those into processes via APIs.

These case studies (Barclays, JPMorgan, Isbank, Capital One) each highlight different facets of the transformation:

- Barclays: Using workflow orchestration to modernize processes.

- JPMorgan: Using a modern core platform with orchestrated migration to overhaul the core banking engine.

- Isbank: Using AI orchestration for fraud to bolster security and efficiency.

- Capital One: Embracing microservices and APIs bank-wide to achieve digital leadership.

Common threads include strong top management support, focus on culture/process (not just tech), and an architecture that prioritizes modularity, APIs, and data usage. These examples also illustrate that while technology is key, people and process changes are equally important – something to remember in any strategic roadmap.

Speaking of roadmaps, let’s now discuss a generic but detailed roadmap for banks to implement orchestration and modernize their architecture, step by step.

Modernization Roadmap: From Siloed Legacy to Agile Orchestration

Modernizing a bank’s technology architecture through orchestration is a multi-year journey that requires careful planning. It’s not just about deploying new software; it’s about evolving the bank’s operating model, skills, and processes alongside technology. Below is a strategic roadmap outlining key phases and considerations for banks aiming to transform their legacy systems with an AI-enabled orchestration layer and microservices architecture.

1. Vision and Assessment

Define the Target State: Start by clearly articulating the target architecture and business objectives. For example, the vision might be “Within 5 years, enable real-time processing across all products, a 360° customer view, and the ability to launch new products in under 3 months.” This vision will guide the technical target: perhaps a cloud-native microservices core with an orchestration layer handling integration and AI decisioning. It’s crucial to get buy-in on this vision from C-level executives and board members – modernization must be a strategic priority, not just an IT project.

Assess Current Systems: Perform a comprehensive audit of existing systems, their capabilities, interdependencies, and pain points. Identify which systems are core to keep (for now) and which are causing the most friction or cost. For instance, maybe the payments switching system is modern enough to retain, but the deposit core is a major bottleneck. Also assess data architecture – where data resides, quality issues, etc. At this stage, gather metrics: maintenance costs, change turnaround times, outage frequencies, etc., to build the business case. Often, findings like “70% of IT spend is on maintaining COBOL systems” or “Customer onboarding requires 5 disconnected systems” will underscore the urgency.

Identify Quick Wins and Priorities: Through the assessment, pick one or two areas that could deliver quick value by orchestration. It might be something like integrating customer data for a better view (which an orchestration layer could do early by federating data via APIs) or improving a specific customer journey like loan approval time by automating a process. Quick wins are important to build momentum and demonstrate value. Also prioritize which legacy systems to target first for modernization based on business impact and feasibility – for example, perhaps modernizing the API layer for channels is a top priority, or replacing a failing ledger system.

2. Architecture & Partner Strategy

Choose an Architecture Approach: Decide on the high-level architecture: are you going with a progressive renovation (layer new orchestration and gradually replace parts of the core) or a greenfield build (build a parallel new stack and migrate users over)? Many banks opt for the progressive route, as discussed. Define the role of the orchestration layer – e.g., it will provide an API gateway, real-time event streaming, and host all AI services. Outline the microservices domain model – breaking the bank’s functions into domains (payments, customer, accounts, loans, etc.) that could be implemented as separate services or modules.

Select Key Technology Platforms/Partners: Based on the roadmap, evaluate which external platforms or vendors could accelerate your journey. This might include:

- Core banking platform (like Thought Machine, Mambu, Temenos, etc.) if replacing or upgrading core.

- Orchestration / Integration layer tech – e.g., adopting a cloud integration platform (like Mulesoft, IBM Cloud Pak, or an open-source stack with Kubernetes + Kafka + API gateway).

- Cloud providers – choosing primary cloud partner(s) and determining which workloads go to public cloud vs. private. Many banks adopt a multi-cloud or hybrid strategy (using AWS, Azure, GCP but also keeping a private cloud for sensitive data).

- AI/Analytics tools – decide on tools for machine learning (e.g., a Hadoop/Spark environment or cloud AI services) and how they integrate.

- Security & DevOps – ensure choices for CI/CD pipelines, container orchestration (Kubernetes), and cybersecurity frameworks are in line; these are crucial for operating a microservices environment securely.

It’s often wise to run RFPs or PoCs with a few vendors. For instance, try out a payment orchestration platform on a small scale to see results.

Plan Data Management and Migration: Data is the hardest part in many transformations. Develop a data strategy early: will you create a new data lake or real-time data hub to aggregate from legacy systems? Possibly the orchestration layer can serve as an interim data hub by pulling data from silos and caching it. Outline how data will be migrated from legacy to new systems – perhaps using a phased approach where new transactions post to both new and old cores (dual run) until validation is done, then cut-over. Also consider data governance – an orchestrated world still needs a single source of truth for certain data. Many banks employ a “strangler pattern” where new microservices gradually take over read/write for certain data domains from the monolith, effectively “strangling” the old system’s role. Plan these domain data migrations carefully.
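The dual-run step described above can be sketched as follows. This is an illustrative outline only: `Ledger`, `DualRunPoster`, and `Mismatch` are hypothetical names, the ledgers are in-memory stand-ins for real cores, and a production version would also need error handling for the case where one core rejects a posting.

```python
from __future__ import annotations

from dataclasses import dataclass
from decimal import Decimal


class Ledger:
    """Minimal in-memory ledger standing in for a core system (hypothetical)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.balances: dict[str, Decimal] = {}

    def post(self, account_id: str, amount: Decimal) -> None:
        current = self.balances.get(account_id, Decimal("0"))
        self.balances[account_id] = current + amount


@dataclass(frozen=True)
class Mismatch:
    account_id: str
    legacy_balance: Decimal
    new_balance: Decimal


class DualRunPoster:
    """Posts every transaction to both cores and reports any divergence."""

    def __init__(self, legacy: Ledger, new: Ledger) -> None:
        self.legacy = legacy
        self.new = new

    def post(self, account_id: str, amount: Decimal) -> None:
        self.legacy.post(account_id, amount)  # legacy stays the system of record
        self.new.post(account_id, amount)     # new core shadows it for validation

    def reconcile(self) -> list[Mismatch]:
        zero = Decimal("0")
        account_ids = sorted(set(self.legacy.balances) | set(self.new.balances))
        return [
            Mismatch(a, self.legacy.balances.get(a, zero), self.new.balances.get(a, zero))
            for a in account_ids
            if self.legacy.balances.get(a, zero) != self.new.balances.get(a, zero)
        ]

    def ready_for_cutover(self) -> bool:
        return not self.reconcile()


legacy_core = Ledger("legacy")
new_core = Ledger("new")
poster = DualRunPoster(legacy_core, new_core)

poster.post("ACC-1", Decimal("100.00"))
poster.post("ACC-2", Decimal("250.50"))
clean_run = poster.ready_for_cutover()

# Simulate a transaction that bypassed the dual-run path and hit only the old core.
legacy_core.post("ACC-2", Decimal("-10.00"))
mismatches = poster.reconcile()
```

Cut-over would proceed only after reconciliation stays clean over an agreed validation window; the length of that window is a business decision rather than something the code decides.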

Establish Governance and Teams: Set up a transformation office or similar governance. This ensures cross-department coordination (IT, operations, risk, business units all aligning). It’s crucial to also reorganize tech teams towards an agile product-aligned model. For example, create squads for “Payments API” or “Customer Onboarding Journey” that have both business and IT people. Each squad can be responsible for the microservices and processes in its domain. This mirrors how fintechs operate (autonomous agile teams) and will be enabled by the new architecture (since microservices allow independent development). Also, upskill or hire for needed expertise: cloud engineers, enterprise architects familiar with microservices, data scientists for AI, etc.

3. Implementation Phases

Phase 1: Foundation (Year 1) – Build the core orchestration layer and foundational infrastructure:

- Set up the API gateway and developer portal for internal/external APIs. Perhaps implement an API management tool and publish initial APIs (even if they wrap legacy for now).

- Establish a cloud or container platform (e.g., a Kubernetes cluster on-premises or in the cloud). Pilot moving a couple of non-critical services to run on it to gain experience.

- Implement a data integration backbone – for instance, stand up Kafka for streaming events, and begin streaming some data (like transaction logs) to a new data lake for analytics.